Post 20: Towards artificial general intelligence 🧠


It seems crazy to me that one of the hottest topics in science right now is how to “teach” machines to think. How did we get here?

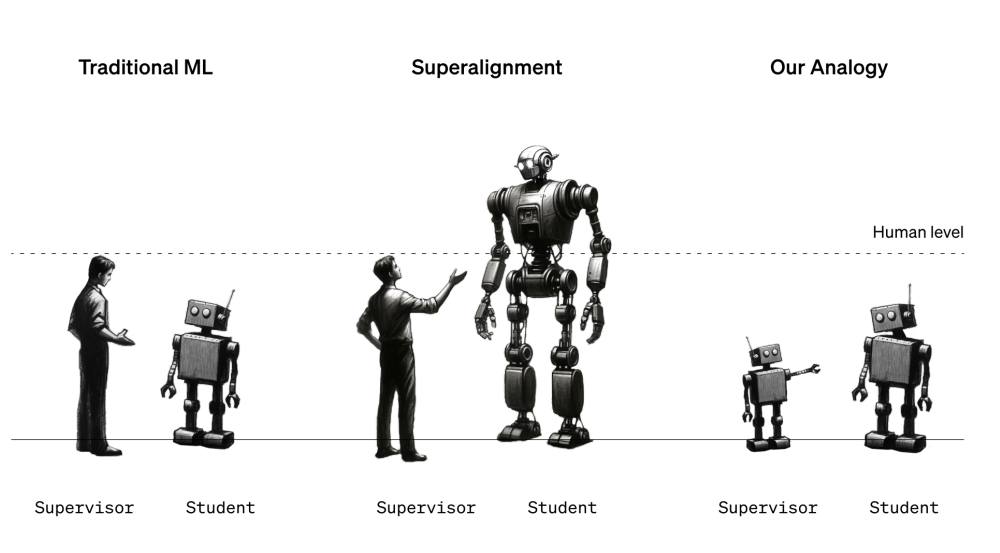

Personally, and perhaps because I’m a biologist, I don’t believe we’ll manage to create artificial general intelligence in the next 10 years, as many AI enthusiasts are betting (and I’m passionate about AI myself). Still, interest is so high that labs like OpenAI pay engineers around 1.2 million MXN per month to work on what they call “alignment”: getting AI systems to act according to human intentions and values, even using other AIs to help align AIs.

How does our learning compare with that of AIs? Consider the data: AIs are trained on orders of magnitude more language than a 5-year-old child has heard, yet they obviously don’t reach the child’s cognitive abilities. Part of the reason is that we build on prior concepts and constantly receive signals through our senses, and each signal is tied to many meanings at once. Chocolate, for example, brings to mind its taste, smell, texture, the context where we ate it, and so on (combining several kinds of input like this is what AI researchers call multimodality).

By estimating how much data is used to train AIs and how much language we take in over our lives, researchers produced this graph (Y-axis: amount of information; X-axis: years). It shows that AIs like DeepMind’s Chinchilla, which was designed to be compute-optimal (to get the most out of a fixed training budget), consume an enormous amount of information and still don’t come close to a child.
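To get a feel for the size of that gap, here is a back-of-the-envelope sketch in Python. The 1.4 trillion training tokens for Chinchilla is the figure its authors reported; the rate of ten million words heard per year by a child is a hypothetical round number chosen only for illustration, and counting one token as one word is a simplification.

```python
import math

# Chinchilla's training set size, as reported by its authors (Hoffmann et al., 2022).
CHINCHILLA_TOKENS = 1.4e12

# Illustrative assumption, not taken from this post: a child hears on the order
# of ten million words per year. Real estimates vary widely between families.
WORDS_HEARD_PER_YEAR = 1e7


def words_heard_by_age(years: float, words_per_year: float = WORDS_HEARD_PER_YEAR) -> float:
    """Cumulative words a child has heard, assuming a constant yearly rate."""
    return years * words_per_year


def gap_in_orders_of_magnitude(model_tokens: float, child_words: float) -> float:
    """log10 of the ratio; treats one token as roughly one word (a simplification)."""
    return math.log10(model_tokens / child_words)


if __name__ == "__main__":
    child = words_heard_by_age(5)
    ratio = CHINCHILLA_TOKENS / child
    print(f"A 5-year-old has heard about {child:.0e} words")
    print(f"Chinchilla saw about {ratio:,.0f} times more")
    print(f"Roughly {gap_in_orders_of_magnitude(CHINCHILLA_TOKENS, child):.1f} orders of magnitude")
```

Under these assumptions the gap comes out at roughly four and a half orders of magnitude; lowering the yearly word rate makes the gap even larger, so the overall picture holds.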

Refs: